Editors’ Desk

Fragmented global discussions on AI’s impact on human rights hinder effective governance in corporations, governments, and international organizations. We address this challenge by creating the AI & Human Rights Index, a publicly accessible website that comprehensively maps AI’s ethical and legal effects on human rights across societal sectors. It will feature over 100 encyclopedia articles, 200 glossary terms, and filterable databases of human rights instruments spanning eight decades. Using a co-intelligence methodology (which synergizes human expertise with AI tools for collaborative problem-solving), AI ethicists, human rights experts, and technologists from the United States, Germany, and South Africa are building this resource before the public’s eyes. Emphasizing harm prevention (nonmaleficence) and promoting beneficial outcomes (beneficence) throughout the AI lifecycle, the Index fosters legal and cultural literacy among technologists, regulators, academics, and the public. By mapping guiding principles, informing policy development, and fostering inclusive global dialogue, the Index empowers stakeholders to align technology with human rights, filling a critical gap in responsible AI governance worldwide.

I. Motivations

The global conversation around artificial intelligence (AI) and human rights can be fragmented and inconsistent, and a necessary depth of knowledge is lacking to effectively evaluate AI’s impact. AI is transforming every sector of society, from healthcare to law enforcement to education, and poses risks and opportunities for human and environmental rights. In the face of uncertainty, governments and regulatory bodies have struggled to keep pace with abrupt technological advances, lacking comprehensive legal mechanisms to ensure that AI systems and the societal sectors that use them are held accountable for upholding inalienable rights. Stakeholders, including technologists, regulators, researchers, and journalists, often engage in isolated conversations about AI, relying on their own vocabulary and disciplinary assumptions, which prevents them from fully understanding each other’s perspectives or trusting their motives.

In response to these trends, responsible tech movements have articulated an inspiring medley of guiding principles to help shape this global conversation; however, there is no unified framework or publicly accessible resource that maps AI systems to all human rights, the inalienable freedoms afforded to every person, across cultures. For the last eight decades, the global community has turned to human rights principles to address the urgent questions that society has faced. Now is the time to draw upon that multigenerational wisdom to cultivate legally and culturally literate stakeholders who can promote the alignment of AI and human rights and specify the accompanying responsibilities.

As developers of the AI & Human Rights Index, we assert that understanding need not imply agreement; rather, understanding is achieved through interdisciplinary and public engagement. By fostering deeper insights into this complex and rapidly evolving subject, we intend to help societies keep pace with AI’s ethical and legal challenges by publishing an evergreen document that will be strong enough and flexible enough to inform and inspire current and future generations. We are committed to closing the regulatory gap by showing that the perennial debate about innovation versus regulation is a false dichotomy that closes conversations. The Index will provide timely and generative resources to elevate our collective conscience about each sector’s role in experiencing technological innovations and shared governance with the ultimate goal of protecting and promoting human rights.

Human rights are good for business, and technology can benefit humans and the earth; human rights are also good for corporate governance, and governments can be stewards of humanity and the environment. These aspirations manifest when we foster legally literate people across sectors who can make informed decisions. By demystifying complex technical and legal concepts and their ethical implications, the Index will empower diverse audiences to make human rights not a political slogan but a way of life.

The Index will build upon a broader ecosystem of initiatives, also led by project team members, that address the intersection of AI and human rights. It aligns with and supports current efforts to create an International Convention on AI, Data, and Human Rights, which emerged from the International Summit on AI and Human Rights held in Munich in July 2024, involving more than 50 international AI and human rights experts. The Convention intends to initiate a global discussion about a binding UN-based convention to anchor human rights in the international governance of AI. The Index will support this effort by providing an important informational resource of concrete examples of adverse human rights impacts in AI and comprehensively highlighting relevant sectors and contexts of AI research, development, and use that warrant greater attention from a human rights perspective. Furthermore, by creating an Index where linkages between AI and specific human rights impacts are curated and made available to relevant stakeholders, the Index will enhance the interpretation of existing frameworks within and beyond the United Nations. The Index will thus act as a tool for all organizations involved in the AI lifecycle, such as developers, private and public deployers of AI, and regulators, to help monitor the adverse and positive impacts AI has on human rights.

II. Research Questions

III. Methodologies

Inspired by the solutions-based journalism movement, our editors employ a Solutions Scholarship methodology in publishing the AI & Human Rights Index. We mindfully consume the urgent discourse surrounding the documented dangers and ethical breaches associated with the development and deployment of artificial intelligence. We take an intellectually honest approach when documenting the specific human rights that AI systems have violated and may violate. While it is critical to elevate the public’s awareness of these concerns, it is equally important to explore how AI has been and could be harnessed to promote and protect human rights. By adopting a solution-based research method, we intend to present a balanced narrative that not only issues warning signals but also proactively identifies constructive ways forward. To achieve this dual focus, our research team conducts evidence-based analysis to assess the impact of AI on human rights. Ultimately, by applying Solutions Scholarship, our research identifies the legal floor on which all AI systems must stand while calling stakeholders’ attention to the soaring ethical ceiling above them. In doing so, we help rebalance the global conversation about AI, charting the well-documented challenges while uplifting the significant opportunities AI presents in advancing human rights.

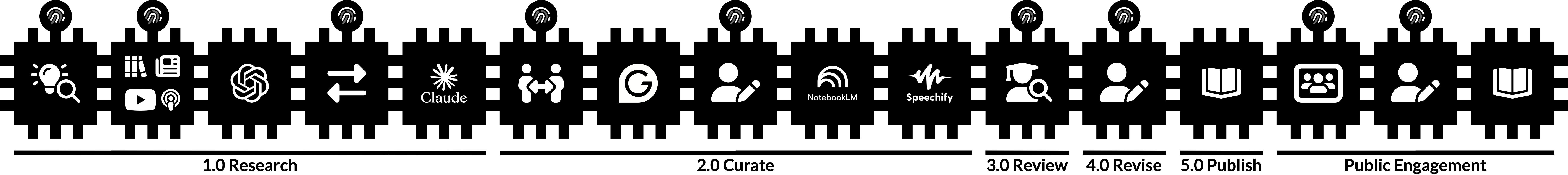

Also at the heart of our research design is a co-intelligence methodology—a collective process emphasizing cross-cultural collaboration and the harmonious integration of human and technological systems. This analytical approach recognizes that knowledge and insight are not confined to individual humans or particular disciplines or civilizations but are enriched through global cooperation and dialogue across legal, cultural, and disciplinary systems supported by AI technologies. The Index editors apply the co-intelligence method by first building diverse human resources. Our international research team is composed of scholars in various disciplines from the United States, Germany, and South Africa, each of whom brings unique lived experience and subject-matter expertise to the project. Our cultural and disciplinary diversity enables us to create conceptual maps of AI and human rights from various perspectives, enriching the depth and breadth of the Index. Together, we mitigate biases, expand our understanding, and widen our circles of compassion. By employing a co-intelligence workflow, the editors ensure that humans are “in the loop” at every stage of the editorial process when using AI technologies (see infographic below). Not just any humans—our diverse subject-matter experts have the editorial expertise to draft, evaluate, refine, and confirm the authenticity and authority of the co-intelligence produced. We assert that human editors are fully accountable for the content created at each stage of our workflow, with particular attention given to editors, contributors, and peer reviewers having the authority and responsibility to rigorously embody the following ethical frameworks.

IV. Ethical Frameworks

We base our editorial workflow on the following ethical principles that serve as a compass as we chart the complex waters of artificial intelligence and human rights. These principles ensure that our work upholds the highest standards of integrity, starting with human dignity and agency.

- Human dignity and agency are the cornerstones of our editorial values. We are steadfast in our commitment to uphold human dignity and agency in every aspect of our work, ensuring that our Ends and the Means we employ honor the inherent worth of every person. We recognize that everyone has inherent worth and the inalienable right to conscience. By placing the human at the center of our editorial process, we make editorial decisions that honor and uphold the dignity and agency of every person while ensuring that our living publication reflects and reinforces everyone’s fundamental rights.

- Legal literacy is a civic competency that is the primary outcome of the public engaging with the AI & Human Rights Index. Our mission extends beyond merely presenting legal or technical information; we aim to cultivate the public’s legal literacy so that they champion human rights at every stage of the AI lifecycle. We strive to enhance public understanding of these critical questions, helping foster legally literate societies capable of making informed decisions. By demystifying complex technical and legal concepts and their ethical implications, we seek to empower our diverse audiences to make human rights not a political slogan but a way of life.

- Cultural literacy and fairness are central to our mission. We actively engage diverse voices from various geographic and disciplinary backgrounds to mitigate bias in AI and human-generated content. This commitment to cultural literacy and fairness ensures that our content represents multiple perspectives equitably and respects the rich diversity of human experiences. We embrace cultural literacy and fairness by involving diverse contributors and reviewers, ensuring our content is balanced, inclusive, and free from bias.

- Accuracy and integrity are paramount in our pursuit of creating an authoritative publication. We are preparing a precise, well-researched, and reliable map of AI and human rights. By maintaining rigorous standards of academic integrity, we ensure that our content is trustworthy and respected within scholarly and professional communities. We uphold the highest standards of accuracy and academic integrity, meticulously verifying our information and transparently disclosing our use of AI in our research to maintain the credibility and reliability of our publication.

- Accountability is integral to our editorial process. We maintain clear responsibility for all content through comprehensive human oversight, ensuring that every piece of information meets our stringent ethical and quality standards. Our editorial board, contributors, and peer reviewers uphold this accountability at every stage. We hold ourselves accountable for the integrity of our content, with human experts overseeing every step to ensure accuracy, fairness, and ethical compliance.

- Transparency in academic publications, especially communal ones like this, is necessary when producing knowledge in the age of AI. By openly disclosing the involvement of AI in our editorial processes and clearly outlining our methodologies, we intend to build trust with our audience. Transparency allows readers to understand how we created and revised the content by fostering an environment of collegiality, honesty, and mental flexibility. We are committed to editorial transparency, clearly communicating the role of AI and our editorial processes to ensure our audience is fully informed about how our diverse team developed this living publication.

- Solution-based research is central to our mission. We focus on creative and constructive problem-solving rather than merely identifying challenges. We are not only interrogators but generators of ideas that build up rather than tear one another down. We aim to generate actionable insights and ideas that can be adopted and adapted within various contexts, contributing positively to AI and human rights. We prioritize solution-based research, seeking innovative and constructive approaches to address the complexities of AI and human rights.

We embody these ethical frameworks when taking the following five steps in developing this living publication: After the research stage, we curate educational content for public use, receive peer reviews from subject-matter experts, and integrate their feedback and ideas offered by the general public when revising and publishing the AI & Human Rights Index.

V. Editorial Workflow

The project’s final deliverable is the publication of the AI & Human Rights Index on this publicly accessible website. To achieve this, faculty editors and graduate student authors will engage in the following five-step editorial workflow that prioritizes human intelligence within a collaborative process, symbolized by the following microchip infographic marked with a fingerprint icon indicating where human insight and oversight occur. Each article page will display Edition labels and invite public commentary.

Please note how the fingerprint icon represents human input at each stage.

Articles that are labeled “Edition 1.0 Research” illustrate how researchers are gathering and analyzing human rights data from diverse sources such as legal databases, academic libraries, books, articles, multimedia, and internet content. This phase establishes a strong ethical and legal foundation for the publication. Bias audits will ensure drafts are diverse, balanced, and fair. AI tools like ChatGPT and Claude will assist in brainstorming, categorizing, posing questions, refining glossary entries, and, most importantly, analyzing eight decades of human rights law. For example, AI will be used to search through large amounts of unstructured data or relevant texts to identify lexical patterns and guide researchers. Researchers will use these AI tools to peer-review the synthetic and human responses, with subject-matter experts probing and refining the results. During this stage, editors will begin forming conceptual maps and ethical positions and producing outputs related to the research questions that define which rights, principles, and instruments to include and which societal sectors and terminology should be featured.

Articles labeled “Edition 2.0 Curate” illustrate how editors will cultivate accessible articles that engage readers’ moral imagination and multiple intelligences. Contributors will utilize AI tools for legal citation annotations, terminology evaluation, and thought experiments, while applications like Grammarly will assist with copyediting and NotebookLM and Speechify will assist with creating companion educational materials and audio guides. Human editors will cross-verify all AI-formatted citations and multimedia against primary sources to ensure accuracy. This process demonstrates how the ethical use of technology can enhance readers’ moral and intellectual development while making the content accessible to diverse audiences.

Articles that are labeled “Edition 3.0 Review” illustrate how at least two subject-matter experts from diverse geographical, cultural, and disciplinary backgrounds will evaluate the content. A volunteer review panel will conduct bias audits and adhere to fact-checking protocols. Experts in law, technology, and the humanities will peer-review each article to ensure accuracy, relevance, and timeliness. Reviewer profiles will be featured on each page, highlighting their qualifications and contributions.

Articles that are labeled “Edition 4.0 Revise” illustrate how editors will incorporate feedback from domain experts and the general public to enrich the quality and credibility of each article, with specific attention to revising the ethical standpoints presented. Grammarly will support the copyediting process, ensuring adherence to our editorial style guide and professional standards. Human editors will prioritize the authors’ intent and the accuracy of the information presented, while software tools like Google Docs and GPTZero will track revisions. Each version will document feedback from contributors, reviewers, and editors to ensure engagement and transparency.

Finally, articles labeled “Edition 5.0 Publish” are published and open for public use. Editors will continue to solicit reader feedback on each webpage, encouraging readers to rate the article’s effectiveness and suggest changes. Using filtering technology to categorize feedback and identify recurring themes, we will adjust our editorial policies and practices to respond to public input, evolving technological advancements, and ethical and legal standards.

Overall, this efficient co-intelligence workflow ensures that humans remain “in the loop” at every stage of the editorial process. Our diverse team brings the editorial expertise to draft, evaluate, and refine content, ensuring authenticity and authority. The workflow involves comprehensive data collection across legal databases, disciplinary resources, and societal sectors, identifying how AI can both violate and advance human rights. By leveraging this collective wisdom through our co-intelligence methodology, we create a synergistic research environment where diverse human insight and AI research technologies enhance one another. This rigorous, multidisciplinary approach allows us to produce a timely and timeless contribution to the global conversation—one that none of us could have accomplished alone.

VI. Standpoints

Sometimes, the editorial board will take a strong ethical stand on specific subjects, such as asserting our commitment to human dignity over other values, such as efficiency in hiring or court sentencing. As a result, the AI & Human Rights Index includes ethical complexities and positions that would otherwise be lost by conducting a purely neutral literature review. We believe in exercising human agency by claiming distinct positions and using our authority to assert our unique standpoint on the moral and legal issues of our time.

These multilayered editorial stages reflect our ethical commitment to rally diverse voices, including subject-matter experts from various fields, and to leverage technological advancements in social science research to produce something none of us could have achieved alone. We are committed to prioritizing human dignity, agency, and oversight at every stage of the process. We value public engagement and are committed to co-creating a robust foundation of trust and credibility to ensure that the AI & Human Rights Index is a timely, reliable, and authoritative resource. We look forward to integrating your feedback in future editions.

VII. Impact

The AI & Human Rights Index will map how AI intersects with each human right, serving technologists, regulators, academics, journalists, and the general public. Technology companies can use the Index to train their developers on how AI can violate or advance specific human rights. Regulators can use it to understand AI’s interaction with rights, identify instruments and sectors, and recognize the need for new laws. Academics can enrich education in technology, law, social sciences, and humanities by assigning learners this free, digital, living textbook. Journalists can use it to help cultivate public understanding of these urgent issues. Ultimately, all audiences may use the Index as a conversational partner, collectively contributing to this evolving resource.

The Index bridges ideological divides by mapping legal and ethical issues to address the public’s illiteracy about AI and human rights. The current discourse focuses on preventing harm, and few guiding principles promote AI for the good of society and the environment. Balancing these perspectives, the Index shifts the conversation from merely mitigating risk to proactively advancing particular human rights in specific sectors. It seeks to transcend divisive language, grounding diverse views and inspiring mental flexibility and ethical empathy.

As AI integrates into daily life, people need accessible tools to understand its impact on human dignity and fundamental freedoms. This dynamic educational website will cultivate the public’s legal and cultural literacy, illuminating the intersections between technology, law, and ethics. We achieve this by framing the Index as a public training and education tool, serving as a regulatory guide for corporate governance and internal audiences, and enabling governments and nonprofits to systematically monitor AI’s impact on human rights.

The Index will define applicable legal instruments, countering the notion of a regulatory void and identifying where new laws and ethical frameworks are needed. As world religions scholar Huston Smith said, the “century’s technological advancements must be matched with comparable advances in human relations.”

VIII. Citations

Because the Index is a living document, we highly recommend that the public cite only articles that have reached the Edition 5.0 stage. Users who want to cite an article before it is published should explicitly identify which version of the article (e.g., 1.0, 2.0, 3.0) they are citing.

For example: “II.A. Right to Peace, Edition 3.0 Review.” In AI & Human Rights Index, edited by Nathan C. Walker, Dirk Brand, Caitlin Corrigan, Georgina Curto Rex, Alexander Kriebitz, John Maldonado, Kanshukan Rajaratnam, and Tanya de Villiers-Botha. New York: All Tech is Human; Camden, NJ: AI Ethics Lab at Rutgers University, 2025. Accessed February 14, 2025. https://aiethicslab.rutgers.edu/Docs/iii-a-peace/